What happens when AI breaks the language barrier in foreign movies?

A night at the cinema with AI that manipulates film

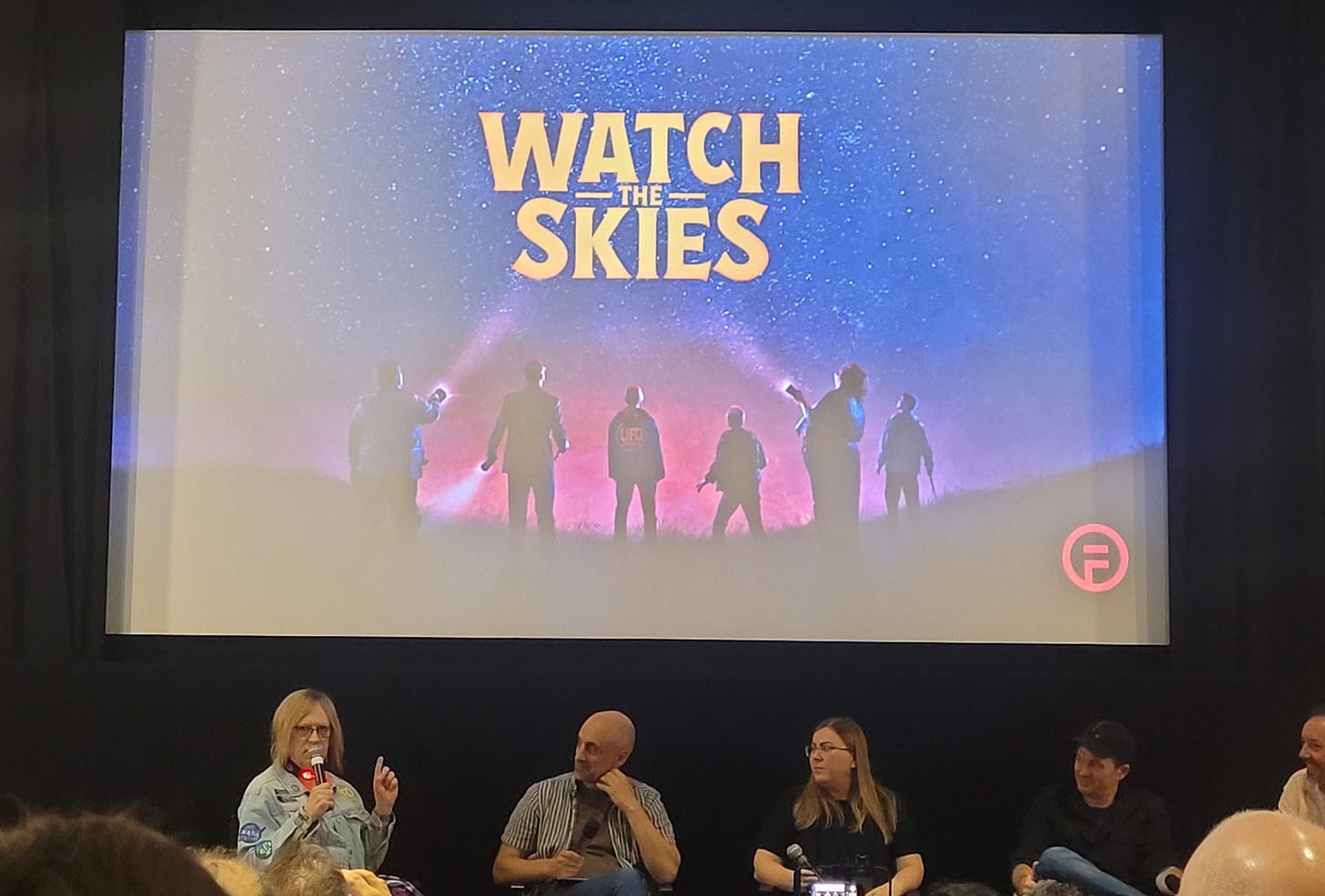

I’ll admit I turned up for the London screening of Watch the Skies completely ignorant. All I saw in the description as my eyes scanned the page was “sci-fi” and “aliens” and I was sold (I am easy to please). It was only when I turned up and got chatting with filmmakers and film editors that I learned I was about to watch a Swedish movie dubbed into English — using AI.

But this wasn’t the typical dubbing process where the audio never matches the actors’ lip movements. Flawless, the company behind the technology, uses AI tools called TrueSync and DeepEditor to manipulate actors’ performances so it looks as if they are actually speaking the language.

All of this sounded brilliant in theory. There have been so many foreign films I’ve wanted to watch but never quite managed, mostly because of the mental juggling act it takes to flip between subtitles and the action. After a long day, sometimes the concentration just isn’t there. But I was also sceptical — there are so many nuances between languages in terms of rhythm and emotional tone. Could AI really handle it?

“Sort of,” one film editor told me during our pre-screening chat. “The AI does most of the work in syncing everything up, and then we fine-tune the rest of the mouth movements.”

So, did it actually work?

It was pretty great to sit back and immerse myself fully in the movie without having to worry about missing crucial plot points while reading subtitles. Still, knowing the movie was dubbed, I found myself scrutinising every micro movement like a detective. Sometimes, it really was convincing; other times, the actors’ lips were barely moving. But I haven’t seen the original, so I can’t make a true comparison. It probably would have been better if I hadn’t known about the dubbing.

Overall, I enjoyed the movie and I don’t feel like the dubbing got in the way of that. I also feel that certain genres, like sci-fi and action movies, would work really well for dubbing, but I imagine it would be trickier with intimate character dramas or films that rely heavily on subtext and precise timing.

What impressed me about their approach

During the Q&A session afterward with Flawless writer-director Scott Mann and the editing crew, it became clear they’re approaching this technology thoughtfully, especially from a user experience perspective. Scott emphasised that it’s a truly collaborative process—actors give full consent and are involved throughout. Sometimes, he said, rewriting dialogue for the dubbing process actually improved scenes beyond the original.

This ethical approach extends to several key areas:

- full consent: the actors are fully involved in the process
- wider audience and accessibility: dubbing opens up the foreign movie market to a wider audience, including people with visual impairments who find it challenging to keep up with subtitles
- transparency: the use of AI is disclosed up front rather than hidden

Navigating the regulatory stuff

Flawless classifies their work as “assisted AI”, not “generative AI”. This is an important distinction, as fully generative AI faces greater regulatory consequences. While the EU AI Act doesn’t specifically address “AI-dubbing”, it does say that anyone manipulating audio or video content must disclose it. China’s new labelling regulations are even stricter, requiring explicit and implicit labels for AI-generated content. By being transparent about their process, Flawless seems to be on the safer side of these evolving regulations, at least for now.

Will AI-labeled content affect enjoyment?

Studies suggest most people exhibit an anti-AI bias: seeing the “AI-created” label can create a mental block that prevents deep engagement, especially when judging meaning or value. But it really does depend on your own view of AI. If you already have a positive view of AI, the label won’t create a mental block and you will be able to appreciate the work for what it is.

It’s a double-edged sword. I believe transparency about AI use is important, but does knowing the technical details really matter if I enjoy the content anyway? And will that knowledge prevent full immersion in the story?

The film industry is clearly grappling with these questions. The Brutalist recently faced a lot of backlash following revelations that it used generative AI to refine Hungarian pronunciation in the film. One viewer was so upset that they tweeted: “The Brutalist AI shit makes me so sad because how many times will this happen in the future where I see some beautifully crafted movie and find out it hid AI in parts just to cheap out without soul”.

I see it differently. Film editors already use countless tools to create movie magic—AI tools are just another addition to the toolbox. But I understand the concern about a slippery slope, and clearly, for some people, any AI involvement triggers deep-rooted fears or resistance.

The audience seems split too. In a recent YouGov survey, only 23% of Britons think automated voice dubbing of films is completely acceptable. Director Alfonso Cuarón has spoken against dubbing entirely, arguing it’s “great to listen to the specific sound and music of every language.”

Final thoughts

I’m excited about the potential here: this could genuinely help more people discover amazing foreign films they’d otherwise skip. But there’s something special about hearing unfamiliar languages, not fully understanding but still being able to grasp certain nuances and being drawn in anyway. Maybe sometimes, the effort is worth it.